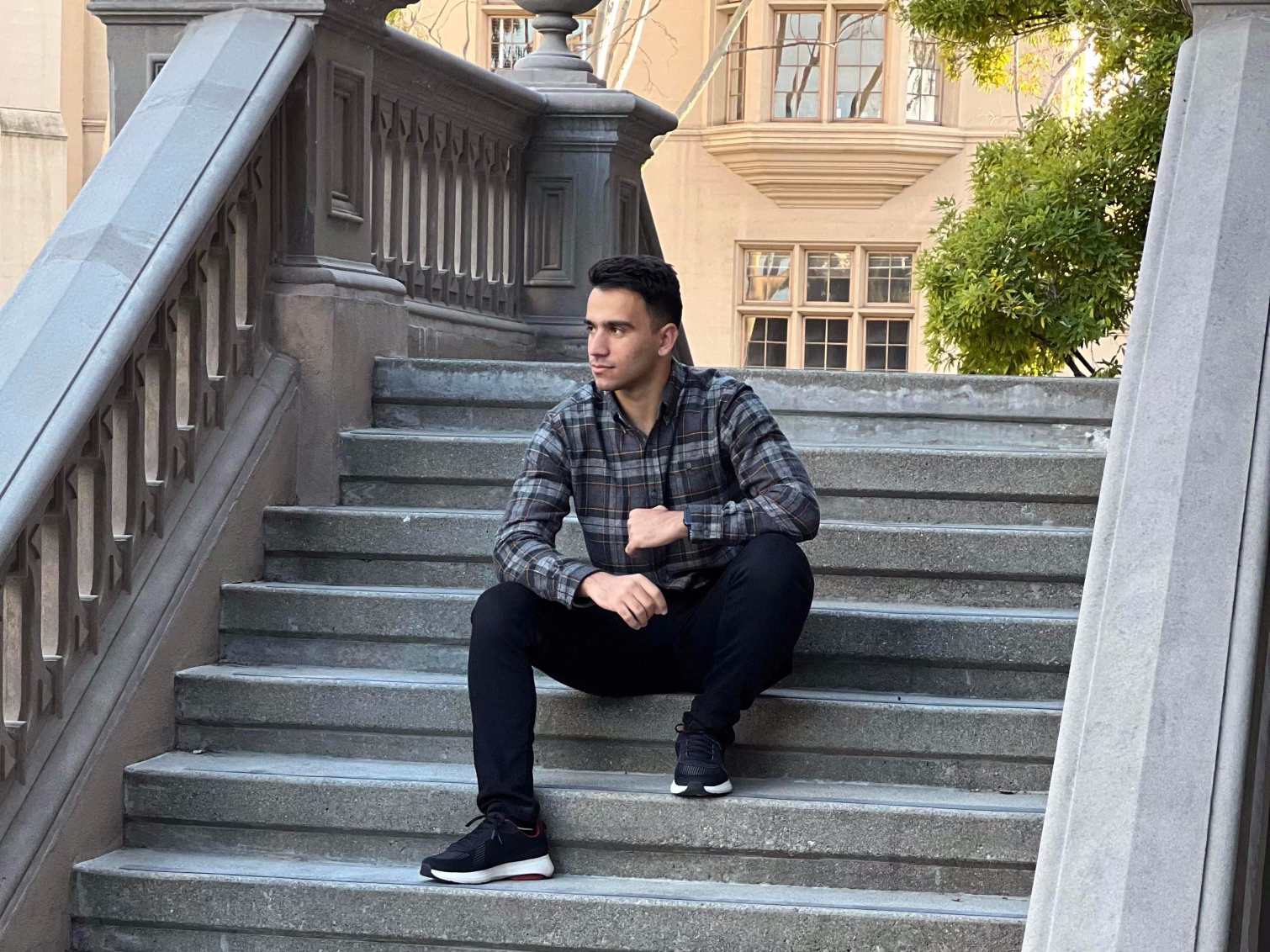

About Me

Hello there 👋

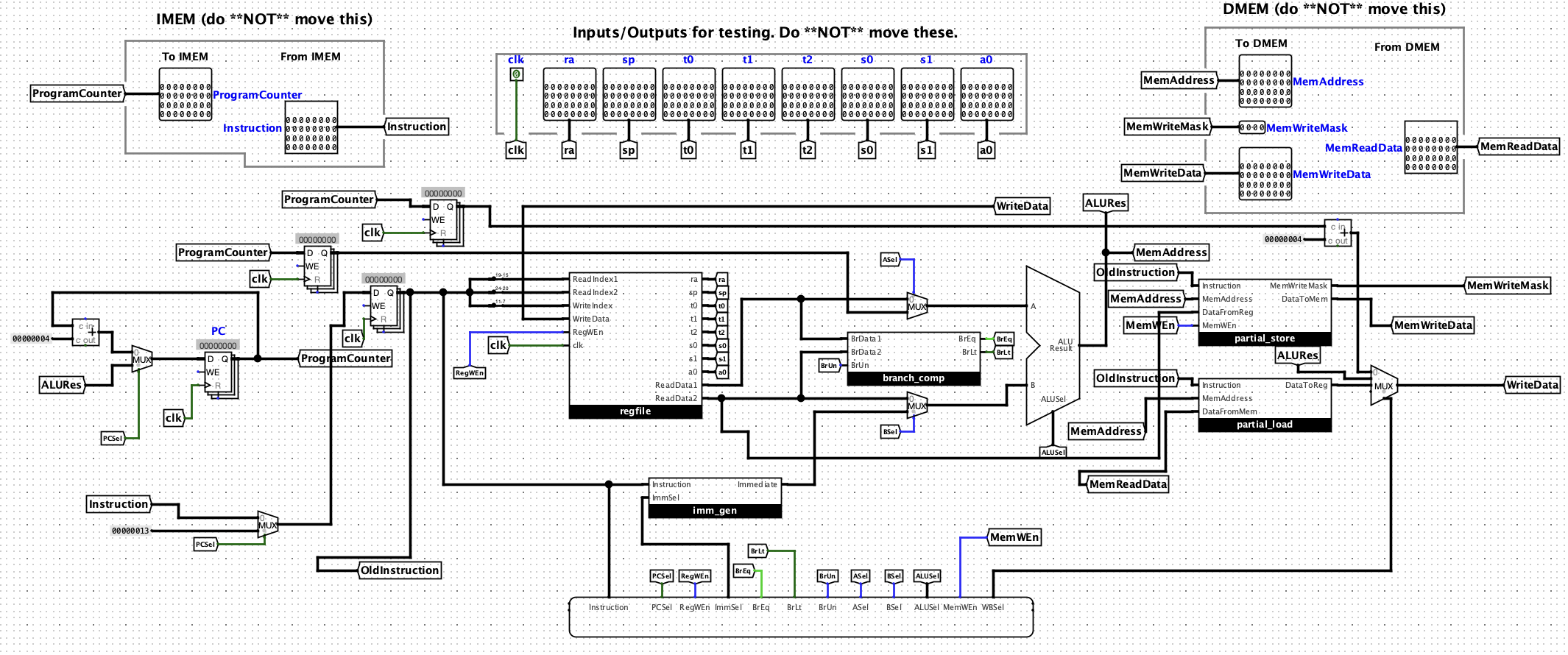

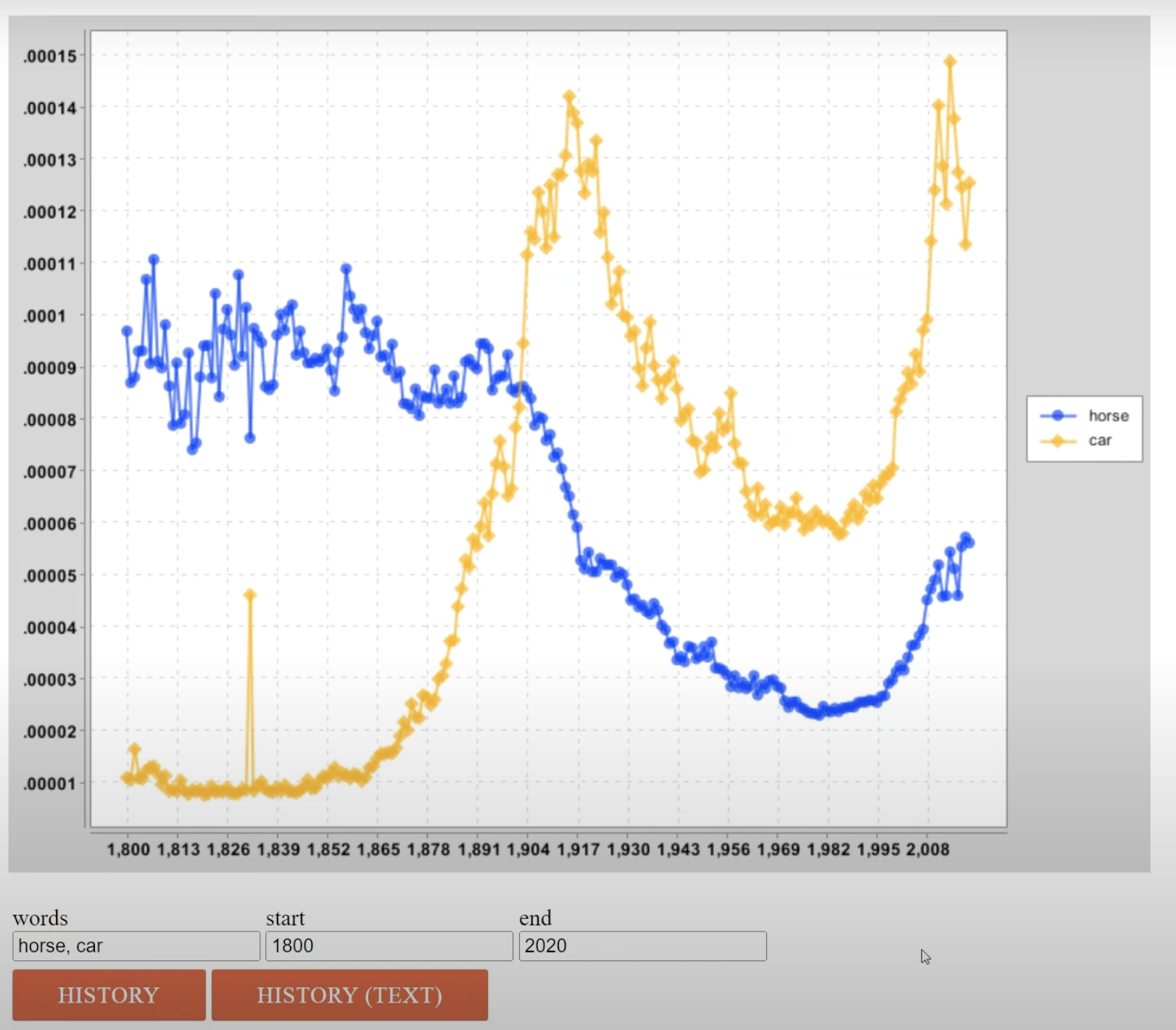

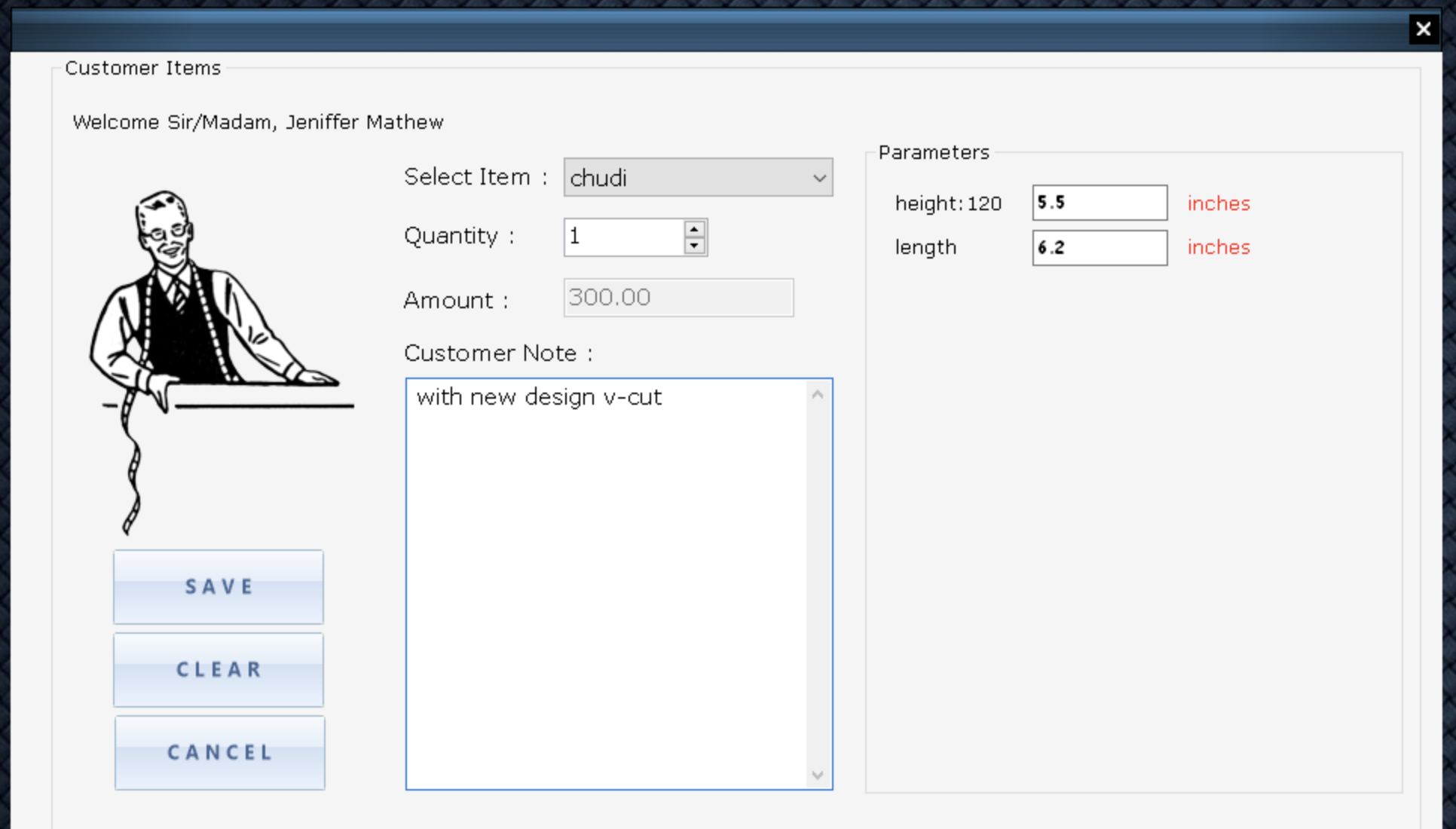

I am Ahmad Melad Mayaar, but I go by "Melad." I'm obsessed with optimizing life, whether through writing efficient code, hitting personal bests at the gym, or scoring goals. I currently work full time as a Systems Engineer at a tech company in Anaheim, CA. I graduated from Berkeley in May 2024 with a B.A. in Data Science and a minor in Computer Science. I spent my college days cleaning big datasets, testing software, building and deploying machine learning models, and coding AI-driven Pac-Man. Outside of that, you could find me weightlifting, hanging out with friends, or on the soccer field.

Career- and research-wise, I am particularly interested in:

- Hardware–software co-design

- System AI

- Software engineering with a strong focus on data analytics

- Effective deep learning HCI for models not limited to LLMs

I am on the hunt for full-time employment and research, as well as exciting projects in the fields mentioned above. If you have any cool ideas, let's chat!

Contact: You can reach me by email at ahmadmeladmayaar@gmail.com or connect with me through LinkedIn (linked below). I'm looking forward to communicating with you!
